AI and ChatGPT-like models are disrupting the world of software and have the potential to disrupt it even more. This is fascinating to observe and to speculate about, but a part of me retreats to what I know and can control. Sure, I am mesmerized by the future potential of a personal AI assistant and concerned about how it will disrupt companies I have invested in. I also contemplate what it means for society and ponder the future of my work.

While there is a time for speculation, I am also curious about what AI can do for my projects today.

One application has been to use ChatGPT (and Bard) to help write code. I personally have limited coding expertise, but with enough time and effort I can usually make my way through some HTML, JavaScript, and CSS. AI assistance seems to be a game changer in this regard. Code that would have taken me a weekend to write can be generated in a 10-minute back-and-forth interaction. This will likely lead me to build some things that I previously would have bought.

For example, the Frugal for Fire list I created in AirTable might instead have been coded specifically to my needs. I think there are a lot of benefits to the AirTable approach, but depending on the project, the trade-off between building and buying could start to become interesting. And for those who already possess the skills to build (e.g., a senior web developer), this will be a really powerful set of tools to multiply their contributions.

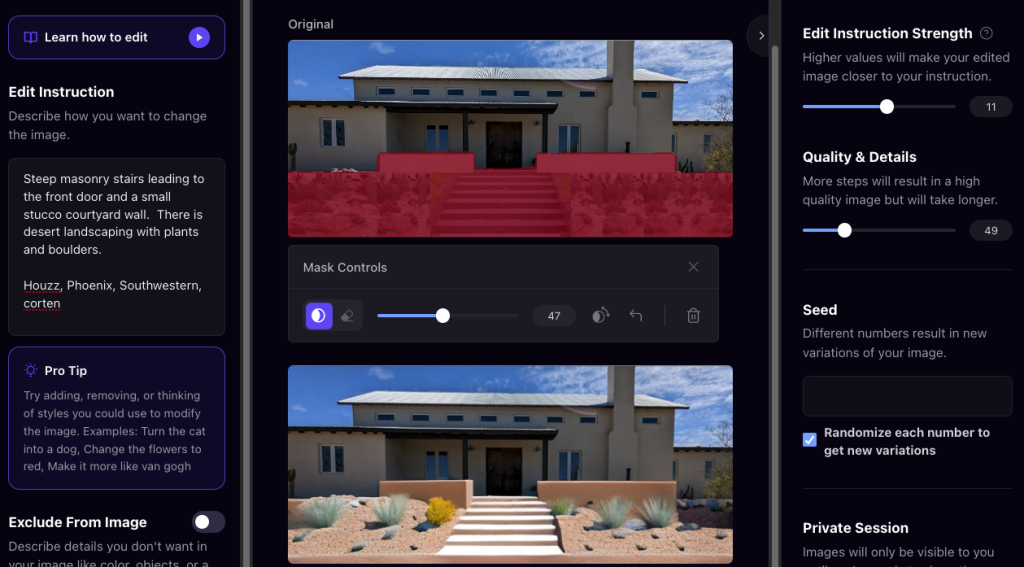

The other use that I have been exploring is a bit more novel. I am in the process of rerouting and landscaping my front drive. As I consider the entryway to my house, I want to visualize different options (e.g., a small courtyard wall?). I was able to take an existing photo, apply some “hints” of what I wanted, and then have AI polish it.

1) The original… that is a lot of rocky rubble.

2) Here are some hints of what I want instead. I made this by searching the web for images resembling what I wanted and inserting them into the photo. I was not too concerned about repetition or finish quality, as the point was not to use the image directly but to set the direction.

3) Lastly, I used a Stable Diffusion model on AI Playground to process the image with a prompt.

It took a few iterations, but I was able to arrive at a realistic-looking image that gives an idea of what I might go for. This is pretty crude, to be sure, but I think that is okay.

It is important to explore how these new tools can be used before they are so commercialized and simplified that we can’t understand what is happening. It is this understanding that will better prepare makers for the future… to take advantage of the new tools, keep the human in the loop, and prepare for broader implications.

While it is not clear what the future holds, I know that I will want to continue to make things. Whether it is software or something physical, if AI can help me create, I am ready to learn.